ETA-Dispatch Coordination

TL;DR

Led cross-functional initiative coordinating ETA policies with the auto-dispatch algorithm. Designed multi-variate experiments balancing cost savings against user experience. Generated $49.5M in annual savings and a 10% increase in bundled orders.

My Role: Product Manager II → Senior PM

- Mapped how ETA inputs affected dispatch's optimization objective function

- Designed multi-variate experiment testing lever combinations across three teams

- Wrote functional and technical requirements for new experiment infrastructure

- Defined success criteria balancing cost savings, user experience, and care costs

- Partnered with engineering to align on tech debt trade-offs and system priorities

- Led final variant selection decision based on trade-off analysis

The Challenge

Grubhub's fulfillment cost per delivery was a key business metric. The math was compelling: even a 1-cent average reduction per delivery, across 500K+ daily deliveries, adds up to more than $1.8M in savings a year.
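As a quick back-of-the-envelope check on that claim, the sketch below multiplies it out. It assumes deliveries run 365 days a year and treats 500K as a floor on daily volume; the function name is only illustrative.

```python
# Back-of-the-envelope: what a per-delivery saving is worth per year.
# Assumptions: 365 operating days, 500,000 deliveries per day (a floor).
DAYS_PER_YEAR = 365


def annual_savings_usd(cents_saved_per_delivery, daily_deliveries, days=DAYS_PER_YEAR):
    """Return yearly savings in dollars for a given per-delivery saving in cents."""
    return cents_saved_per_delivery * daily_deliveries * days / 100


print(f"${annual_savings_usd(1, 500_000):,.0f}")  # $1,825,000
```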

The team was under pressure to hit cost reduction targets. Bundled orders—assigning multiple deliveries to one courier—were a known lever because they reduce the fulfillment cost per delivery in the bundle.

When I joined the ETA team, exploratory experiments had already suggested that longer ETAs led to more bundling. The hypothesis was intuitive: more time slack gives the dispatch system more opportunity to bundle orders together.

But "just make ETAs longer" wasn't simple. ETAs are a core input into the auto-dispatch algorithm, and changing them created cascading effects across the entire system.

Understanding the System

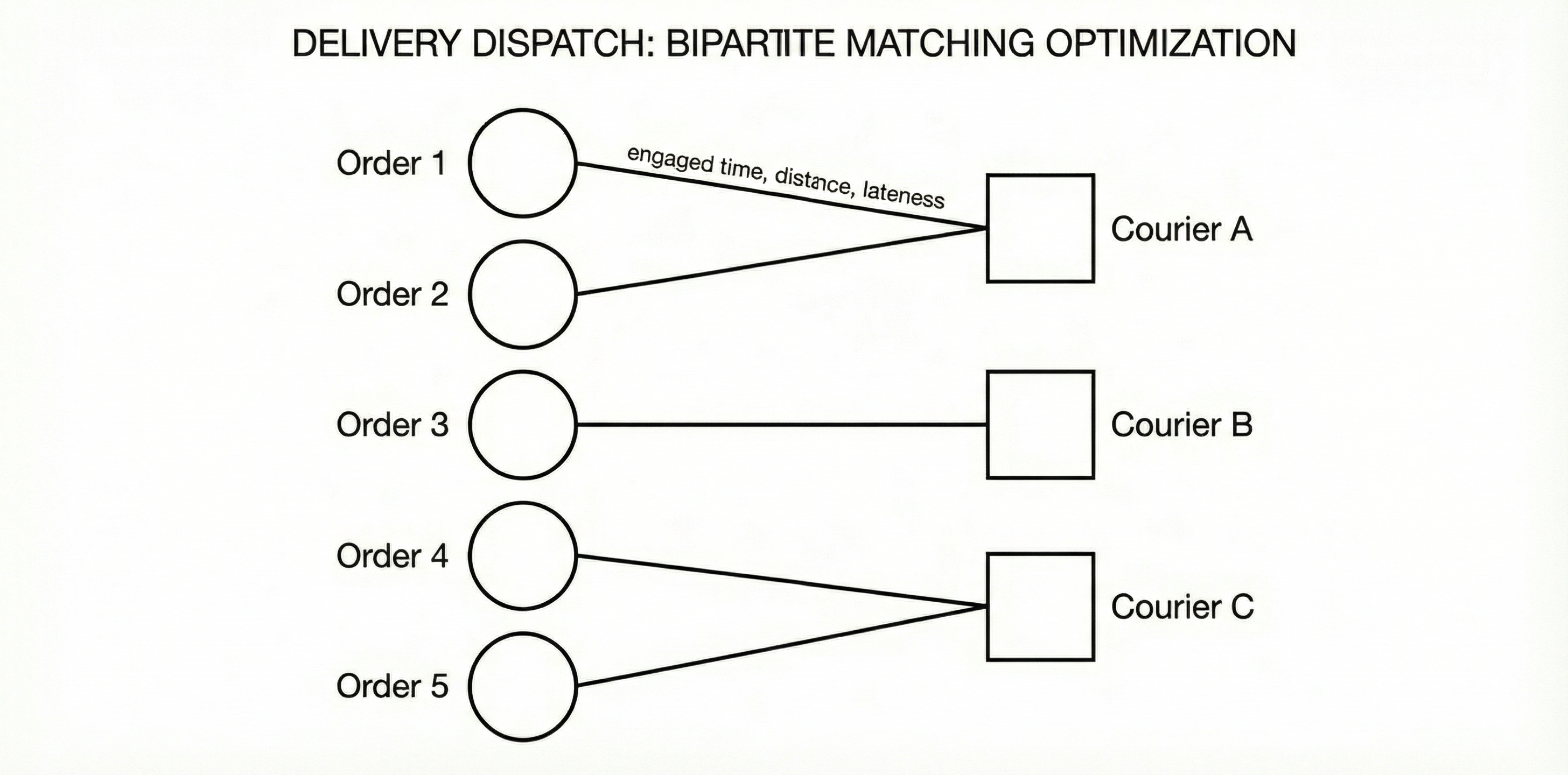

To identify the right solution, I first needed to understand how dispatch actually used ETAs. Auto-dispatch is a microservice that optimally assigns orders to couriers. Technically, it's a bipartite matching problem:

- One side of the graph: Orders waiting for assignment

- Other side: Available couriers

- Edges: Decorated with distance, time to restaurant, arrival time relative to ETA, and other factors

- Solver: Mixed integer programming optimizer that minimizes total cost of all assigned edges within milliseconds

The objective function factored in multiple variables, including a "lateness penalty"—a function of how late the courier would arrive relative to the promised ETA.

This was the key insight: ETAs aren't just user-facing promises—they're a core input to the optimization. If an ETA is tight, dispatch prioritizes on-time arrival, limiting flexibility. If an ETA has slack, dispatch can optimize for other objectives—like bundling orders together.
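To make the mechanism concrete, here is a toy sketch of an assignment with a lateness penalty. Everything in it is an assumption for illustration: the couriers, arrival times, ETAs, and penalty weight are made up, and a brute-force search over permutations stands in for the production mixed integer programming solver.

```python
from itertools import permutations

# Hypothetical weight on each minute a courier arrives after the promised ETA.
LATENESS_WEIGHT = 10

# Hypothetical arrival time (minutes from now) if a courier takes an order.
ARRIVAL_MIN = {
    ("A", "o1"): 20, ("A", "o2"): 25,
    ("B", "o1"): 40, ("B", "o2"): 30,
}


def edge_cost(courier, order, eta_min):
    """Cost of one edge: travel minutes plus a penalty for arriving after the ETA."""
    arrival = ARRIVAL_MIN[(courier, order)]
    lateness = max(0, arrival - eta_min[order])
    return arrival + LATENESS_WEIGHT * lateness


def best_assignment(couriers, orders, eta_min):
    """Brute-force the one-to-one assignment with the lowest total cost."""
    best = None
    for perm in permutations(orders):
        pairs = list(zip(couriers, perm))
        cost = sum(edge_cost(c, o, eta_min) for c, o in pairs)
        if best is None or cost < best[0]:
            best = (cost, pairs)
    return best


couriers, orders = ["A", "B"], ["o1", "o2"]
tight_eta = {"o1": 45, "o2": 26}
slack_eta = {order: eta + 5 for order, eta in tight_eta.items()}  # +5 min buffer

print(best_assignment(couriers, orders, tight_eta))  # (65, [('A', 'o2'), ('B', 'o1')])
print(best_assignment(couriers, orders, slack_eta))  # (50, [('A', 'o1'), ('B', 'o2')])
```

With the tight ETAs, the solver pays 15 extra travel minutes to keep order o2 on time. With a five-minute buffer, the lateness penalty drops out and it picks the cheaper assignment. The predictions and the objective are unchanged; only the promised ETA moved.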

This created the opportunity: strategically adding slack to ETAs could shift dispatch behavior toward more bundling, without changing the underlying ML predictions or the dispatch algorithm itself.

The Approach

Identifying the Levers

Working with counterparts on the Dispatch and Capacity Management teams, I mapped out multiple levers:

| Lever | Team | Mechanism |
|---|---|---|
| Extend ETAs by X minutes | ETA (my team) | Add buffer time to ML-generated ETA predictions before showing to users |
| Add "engaged minutes" to dispatch objective | Dispatch | New variable measuring courier time-efficiency per delivery; directly incentivizes bundling by making multi-drop routes more attractive |
| Capacity management intervention | Capacity | Complementary lever with smaller expected impact |

The "engaged minutes" lever was particularly interesting: if dispatch minimized engaged minutes as part of its objective function, it would naturally favor bundled assignments. However, this could also reduce the algorithm's emphasis on lateness—potentially leading to more late deliveries.
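The trade-off can be sketched with two candidate plans for the same pair of orders. This is a simplified illustration, not the dispatch team's actual objective: the `Plan` structure, the weights, and all minute values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Plan:
    name: str
    engaged_minutes: float  # total courier time spent on these deliveries
    arrival_min: dict       # order id -> arrival time in minutes


def total_lateness(plan, eta_min):
    """Sum of minutes each order arrives after its promised ETA."""
    return sum(max(0, arrival - eta_min[order])
               for order, arrival in plan.arrival_min.items())


def objective(plan, eta_min, w_engaged, w_late):
    """Hypothetical objective: weighted engaged minutes plus weighted lateness."""
    return w_engaged * plan.engaged_minutes + w_late * total_lateness(plan, eta_min)


def choose(plans, eta_min, w_engaged, w_late):
    """Pick the plan with the lowest objective value."""
    return min(plans, key=lambda p: objective(p, eta_min, w_engaged, w_late))


eta_min = {"o1": 38, "o2": 38}
solo = Plan("solo", engaged_minutes=58, arrival_min={"o1": 30, "o2": 28})
bundle = Plan("bundle", engaged_minutes=42, arrival_min={"o1": 30, "o2": 42})

print(choose([solo, bundle], eta_min, w_engaged=0.0, w_late=1.0).name)  # solo
print(choose([solo, bundle], eta_min, w_engaged=1.0, w_late=1.0).name)  # bundle
```

Once engaged minutes carry weight, the bundled route wins even though its second drop arrives four minutes after the ETA. That is the lateness risk described above, in miniature.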

Validation: Lightweight to Heavyweight

Rather than jumping straight to a large, risky experiment, we validated incrementally.

Phase 1: Isolated lever tests. We tested each lever on its own first:

- Tested ETA extensions in isolation: measured effect on fulfillment cost per delivery

- Tested dispatch objective changes separately: measured effect on lateness and bundling rates

Phase 2: Combined multivariate experiment. After seeing promising signals, we combined levers into a single experiment. This was critical because both levers act on the same delivery network, so their combined impact isn't simply additive. We needed to measure interaction effects to understand the true opportunity.
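A minimal sketch of what "interaction effect" means in a 2x2 design follows. The cell values are placeholders chosen for illustration, not measured results from the experiment, and the cell labels are hypothetical.

```python
# Hypothetical fulfillment cost per delivery, in cents, for each cell of a
# 2x2 design. Placeholder values for illustration only; not measured results.
COST_CENTS = {
    ("base_eta", "base_dispatch"): 500,        # control
    ("extended_eta", "base_dispatch"): 495,    # ETA lever only
    ("base_eta", "engaged_minutes"): 493,      # dispatch lever only
    ("extended_eta", "engaged_minutes"): 485,  # both levers together
}


def factorial_effects(cost):
    """Split the combined effect into each lever's effect plus an interaction term."""
    control = cost[("base_eta", "base_dispatch")]
    eta_effect = cost[("extended_eta", "base_dispatch")] - control
    dispatch_effect = cost[("base_eta", "engaged_minutes")] - control
    combined_effect = cost[("extended_eta", "engaged_minutes")] - control
    return {
        "eta_effect": eta_effect,
        "dispatch_effect": dispatch_effect,
        "combined_effect": combined_effect,
        # What the combination delivers beyond the sum of the individual effects.
        "interaction": combined_effect - (eta_effect + dispatch_effect),
    }


print(factorial_effects(COST_CENTS))
# {'eta_effect': -5, 'dispatch_effect': -7, 'combined_effect': -15, 'interaction': -3}
```

In this made-up example, adding the two isolated effects would predict a 12-cent reduction, while the combined cell shows 15. Only a combined experiment can surface that extra 3 cents, or a shortfall if the levers interfere.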

Building the Experiment Infrastructure

We had never run this type of cross-system experiment before. I wrote functional and technical requirements for new capabilities:

- Selective ETA extension: Ability to extend ETAs by configurable amounts for experiment variants

- Dispatch integration: Ensuring extended ETAs propagated correctly through the system

- Experiment targeting and measurement: Infrastructure to assign users to variants and track fulfillment cost, late %, care contacts, and bundling rates
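One common way to meet requirements like these is deterministic, hash-based bucketing, sketched below. This is an assumption about how such infrastructure could look, not a description of what was built: the variant names, extension values, and experiment key are all hypothetical.

```python
import hashlib

# Hypothetical variant configuration: each variant adds a fixed buffer (minutes)
# to the ML-predicted ETA before it is shown to the user.
VARIANTS = [
    {"name": "control", "eta_extension_min": 0},
    {"name": "eta_plus_3", "eta_extension_min": 3},
    {"name": "eta_plus_5", "eta_extension_min": 5},
]


def assign_variant(user_id, experiment="eta_dispatch_v1"):
    """Map a user to a variant deterministically, so repeat visits match."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    bucket = int(digest, 16) % len(VARIANTS)
    return VARIANTS[bucket]


def displayed_eta_min(predicted_eta_min, user_id):
    """Apply the variant's buffer on top of the unchanged ML prediction."""
    return predicted_eta_min + assign_variant(user_id)["eta_extension_min"]


variant = assign_variant("user-123")
print(variant["name"], displayed_eta_min(30, "user-123"))
```

Hashing the experiment key together with the user ID keeps assignment stable across sessions without storing state. The extended ETA would then need to be the value passed on to dispatch, which is the propagation requirement listed above.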

Experiment Design

My job was to design the multivariate experiment while balancing competing goals.

Primary goal: Decrease fulfillment cost per delivery

Guardrails:

- Maintain acceptable late delivery rate

- Keep care costs under threshold

My responsibilities:

- Define success criteria and acceptable trade-off thresholds before seeing results

- Coordinate engineering teams across three domains (ETA, Dispatch, Capacity)

- Work with data science on power analysis and experiment duration

- Align operations on business goals while representing user experience
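For a sense of what the power analysis involves, here is a rough sketch using the standard two-sample formula for comparing means. It assumes independent observations, which a shared delivery network violates, so a real analysis would need to adjust for that. The standard deviation, minimum detectable difference, number of arms, and daily volume are all hypothetical inputs.

```python
from math import ceil
from statistics import NormalDist


def deliveries_per_arm(sigma, min_detectable_diff, alpha=0.05, power=0.80):
    """Sample size per arm for a two-sided, two-sample test on a mean.

    Uses n = 2 * (z_{1-alpha/2} + z_{power})^2 * sigma^2 / delta^2.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    n = 2 * (z_alpha + z_beta) ** 2 * sigma ** 2 / min_detectable_diff ** 2
    return ceil(n)


def days_needed(n_per_arm, arms, eligible_deliveries_per_day):
    """Days required to fill every arm at a given daily volume."""
    return ceil(n_per_arm * arms / eligible_deliveries_per_day)


# Hypothetical inputs: $2.00 standard deviation in cost per delivery,
# and a 5-cent difference as the smallest effect worth detecting.
n = deliveries_per_arm(sigma=2.0, min_detectable_diff=0.05)
print(n, days_needed(n, arms=4, eligible_deliveries_per_day=50_000))
```

Halving the detectable difference roughly quadruples the required sample, which is why agreeing on the smallest effect worth detecting matters before fixing the duration.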

Navigating Trade-offs

Partnering with Engineering on Tech Debt

The engineering team raised legitimate concerns about the implementation approach:

| Concern | Example | Validity |
|---|---|---|
| Implementation cleanliness | Adding X minutes to ML predictions wasn't architecturally elegant | Valid |
| Tech debt | New code paths would require ongoing maintenance | Valid |
| Edge cases | A 10-min ETA becoming 20-min feels wrong; a 50-min ETA becoming 60-min may be unnecessary | Valid |

Rather than treating these as obstacles, I worked with engineering to understand the risks. We asked: what's the cost of adding this tech debt? Does it outweigh the potential gains from validating the hypothesis faster?

Together, we aligned on priorities: validate the hypothesis first, then invest in clean architecture if results justified it. We agreed to ship the experiment with a commitment to redesign the system properly next quarter.

This wasn't a compromise—it was the right product decision. Spending months on clean architecture before knowing if the experiment would work would be premature optimization.

Defining "Success" Upfront

There was extensive debate about acceptable trade-offs. The dispatch team worried that optimizing for engaged minutes could lead to more late deliveries. Operations cared about care costs. I was balancing user experience against cost savings.

Rather than debating abstractly, I worked with stakeholders to quantify acceptable thresholds before seeing results:

- How much late delivery increase was acceptable given the fulfillment savings?

- What care cost increase would negate the efficiency gains?

Having pre-agreed thresholds made the final decision defensible.
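Pre-agreed thresholds turn variant selection into a mechanical rule: filter out variants that breach a guardrail, then take the largest saving among the rest. The sketch below shows that rule with hypothetical threshold values and variant metrics; none of the numbers come from the experiment.

```python
# Hypothetical guardrails, agreed before any results are seen.
THRESHOLDS = {
    "max_late_rate_increase_pp": 1.0,   # percentage points
    "max_care_cost_increase_pct": 2.0,  # percent
}

# Hypothetical variant readouts for illustration only.
VARIANT_RESULTS = [
    {"name": "A", "savings_cents": 2, "late_rate_increase_pp": 0.3, "care_cost_increase_pct": 0.5},
    {"name": "B", "savings_cents": 5, "late_rate_increase_pp": 0.8, "care_cost_increase_pct": 1.5},
    {"name": "C", "savings_cents": 8, "late_rate_increase_pp": 1.6, "care_cost_increase_pct": 3.0},
]


def passes_guardrails(result, thresholds):
    """True if the variant stays within every pre-agreed threshold."""
    return (
        result["late_rate_increase_pp"] <= thresholds["max_late_rate_increase_pp"]
        and result["care_cost_increase_pct"] <= thresholds["max_care_cost_increase_pct"]
    )


def select_variant(results, thresholds):
    """Pick the highest-saving variant that passes guardrails, or None."""
    eligible = [r for r in results if passes_guardrails(r, thresholds)]
    return max(eligible, key=lambda r: r["savings_cents"]) if eligible else None


print(select_variant(VARIANT_RESULTS, THRESHOLDS)["name"])  # B
```

Variant C saves the most in this example but breaches both guardrails, so B ships. Because the thresholds were fixed in advance, nobody can argue the bar was moved to fit the result.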

Results

We tested multiple variants combining different levels of ETA extension with different dispatch objective weights. I led the decision on which variant to ship based on our pre-agreed thresholds.

| Metric | Result |
|---|---|
| Annual savings | $49.5M |
| Bundled orders increase | 10% |
| Care costs | Kept under threshold |

Selected variant: Maximized cost savings while keeping care cost impact within acceptable bounds. We accepted some increase in late deliveries, offset by significant fulfillment savings—a deliberate choice made with full visibility into the trade-offs.

What I Learned

Understanding ML Systems as Inputs, Not Outcomes

The key insight from this work: model accuracy is an output metric, not an outcome metric.

The ML-generated ETA predictions had inherent error, but users didn't care about prediction error. They cared about late deliveries and broken promises. By understanding how ETA predictions flowed into the dispatch optimization, I could keep user outcomes within agreed bounds (late deliveries within acceptable thresholds) and improve business outcomes ($49.5M in annual savings) without changing the ML models themselves.

This required understanding the full system, not just individual components.

Partnering with Engineering on Technical Trade-offs

The engineering team had legitimate concerns about tech debt. Rather than treating this as an obstacle, I worked with them to:

- Quantify the risk of adding more tech debt to the system

- Align on priorities: when to push forward for business outcomes vs. when to pause for system health

- Make a joint decision we could all stand behind

The agreement to ship now and redesign next quarter was the right call. We validated the hypothesis first, then invested in clean architecture once we knew the approach worked.

Making Decisions with Incomplete Information

We couldn't measure everything we wanted to. Some impacts were hypotheses we didn't have the means to test in this experiment. We made the best decision with available information, shipped, and learned.

This is the reality of product work: you rarely have perfect data. The goal is to reduce uncertainty enough to make a defensible decision, not to eliminate uncertainty entirely.